
Automatically Publish a Repo as a PyPI Library with GitHub Actions

Just a config file, a few Python scripts, and a GitHub Actions workflow make publishing to PyPI effortless.

Introduction

Last year I had the opportunity to turn my company's API into a JavaScript SDK by relying on the project's openapi.yaml file and the OpenAPI Generator CLI.

Notehub JS

For the full details of how I created Notehub JS, I encourage you to read the original blog post I published about it here.

I did this as much for myself as for other developers: a group of my coworkers and I were building a lot of JavaScript-based web apps to display and interact with IoT data, which my company, Blues, specializes in moving from devices in the real world to the cloud over cellular. To make it easier to update the JavaScript library as the API it's based on continues to grow and evolve, I set up a bunch of GitHub Actions workflows to handle most of the tedious, repetitive tasks for me, and I learned a bunch of useful new things along the way.

This year, another coworker who works frequently in Python asked if I could create a Python SDK for the API. I agreed, feeling pretty confident about most of the steps involved. One new thing I did have to learn was how to build and publish a Python package to the Python Package Index, PyPI.

In this post, I'll show you how to set up a GitHub Actions workflow that automatically publishes a new version of a GitHub project to PyPI whenever a new release is created: no muss, no fuss, and very little manual input required.

Notehub Py

As with the original Notehub JS project, the Notehub Py project's structure is a bit different from your typical repo because it is automatically generated from the Notehub API's openapi.yaml file. The openapi.yaml file follows the OpenAPI specification standards, and can be used with the OpenAPI Generator CLI to build a Python-based SDK to interact with the Notehub API in just a few commands from a terminal.

Here's a simplified view of the Notehub Py repo's folder structure:

```
.
├── .github/
│   └── workflows/
│       └── GH Action files
├── lib_template/
│   └── python library template files
├── src/
│   ├── notehub_py/
│   │   └── Python-based API and model files
│   ├── docs/
│   │   └── MD documentation
│   ├── test/
│   │   └── unit tests
│   ├── dist/
│   │   └── bundled .tar.gz and .whl files for PyPI
│   ├── pyproject.toml
│   ├── requirements.txt
│   └── setup.py
├── openapi.yaml
├── config.json
├── README.md
└── scripts.py
```

The openapi.yaml file lives at the root level of the project along with its config.json file, a scripts.py file, and a few other bits and pieces (license, contribution guidelines, code of conduct, etc.), but the real meat of the library lives inside the src/ subfolder.

What's unique about this subfolder is that it is regenerated each time the openapi.yaml file is updated, and it contains all the API endpoints, models, and docs, plus the dist/ folder for the notehub-py SDK that actually gets published on PyPI. Publishing just a subfolder of a GitHub repo is a little unusual, but don't worry: it can be done in an automated fashion.

Create scripts.py and config.json files to automate generating the library and packaging it for PyPI

To automate the build and publish steps to deploy Notehub Py to the PyPI registry, we need a couple of things: a config.json file and a scripts.py file.

The config.json file holds additional properties used by the OpenAPI Generator and its Python library template to define certain variables like package name, package version, GitHub repo URL, etc. Here is what Notehub Py's config.json file looks like:

```json
{
  "packageName": "notehub_py",
  "packageUrl": "https://github.com/blues/notehub-py",
  "projectName": "notehub-py",
  "packageVersion": "1.0.2"
}
```

Every time a new version of the Notehub Py library needs to be published to PyPI, the packageVersion for the project is updated in this file, and then the commands to regenerate the library and its distribution packages (which I'll cover next) are run.
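Because the version lives in a single JSON key, bumping it can be scripted as well. Here's a minimal sketch, assuming config.json has the shape shown above; set_package_version is a hypothetical helper, not one of the functions in Notehub Py's scripts.py.

```python
import json
from pathlib import Path


def set_package_version(new_version: str, config_path: str = "config.json") -> str:
    """Hypothetical helper: update packageVersion in config.json.

    Returns the previous version so the caller can log the change.
    """
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    old_version = config["packageVersion"]
    config["packageVersion"] = new_version
    # indent=2 keeps the file readable; the trailing newline keeps diffs clean.
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return old_version
```

Calling set_package_version("1.0.3") from the repo root would update the file before the library is regenerated. Since json.dumps rewrites the whole file, any hand-made formatting in config.json would not survive, which is fine for a small machine-edited config like this one.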

The scripts.py file is a set of reusable commands to automate the steps of updating this repo based on the latest version of the openapi.yaml, and packaging it up for publishing to PyPI.

```python
import sys
import subprocess
import shutil
import os


def generate_package():
    try:
        subprocess.run([
            "openapi-generator-cli",
            "generate",
            "-g",
            "python",
            "--library",
            "urllib3",
            "-t",
            "lib_template",
            "-o",
            "src",
            "-i",
            "openapi.yaml",
            "-c",
            "config.json"
        ])
    except Exception as e:
        print("Exception when generating package: %s\n" % e)


def build_distro_package():
    try:
        os.chdir("src/")
        # Check if the 'dist/' folder exists
        if os.path.exists("dist"):
            # If it exists, delete it and its contents
            shutil.rmtree("dist")
        # Upgrade the 'build' module
        subprocess.run([
            "python3",
            "-m",
            "pip",
            "install",
            "--upgrade",
            "build"
        ])
        # Generate a new 'dist/' folder
        subprocess.run([
            "python3",
            "-m",
            "build"
        ])
    except Exception as e:
        print("Exception when building distro package: %s\n" % e)


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("Usage: python3 scripts.py [generate_package | build_distro_package]")
        sys.exit(1)

    script_to_run = sys.argv[1]

    if script_to_run == "generate_package":
        generate_package()
    elif script_to_run == "build_distro_package":
        build_distro_package()
    else:
        print("Invalid script name. Use one of: generate_package, build_distro_package")
        sys.exit(1)
```

The first function, generate_package(), uses the subprocess module to run the openapi-generator-cli tool to generate a new version of the Notehub Py library. Let's break down each of the arguments in the command:

- "generate": Specifies the action to generate code.
- "-g", "python": Indicates that the target language for the generated code is Python.
- "--library", "urllib3": Specifies that the generated code should use the urllib3 library for HTTP requests.
- "-t", "lib_template": Points to a template directory named lib_template that contains custom templates for the code generation.
- "-o", "src": Sets the output directory to src, where the generated code will be placed.
- "-i", "openapi.yaml": Specifies the input OpenAPI specification file.
- "-c", "config.json": Uses a configuration file named config.json to customize the generation process.
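One caveat about the functions as written: subprocess.run does not raise an exception when a command exits with a non-zero status unless check=True is passed, so the try/except blocks above only catch problems like the executable not being found. A failed generator run would otherwise go unnoticed. Here's a minimal sketch of a stricter variant; run_step is a hypothetical helper name, and the generator arguments are the same ones listed above.

```python
from __future__ import annotations

import subprocess
import sys


def run_step(cmd: list[str]) -> None:
    """Hypothetical helper: run one command and stop the script if it fails."""
    try:
        # check=True makes subprocess.run raise CalledProcessError on a
        # non-zero exit status instead of returning quietly.
        subprocess.run(cmd, check=True)
    except FileNotFoundError:
        # Raised when the executable itself (e.g. openapi-generator-cli) isn't on PATH.
        sys.exit(f"Command not found: {cmd[0]}")
    except subprocess.CalledProcessError as e:
        sys.exit(f"{' '.join(cmd)} failed with exit code {e.returncode}")


def generate_package() -> None:
    # Same generator arguments as in scripts.py, with failures now surfaced.
    run_step([
        "openapi-generator-cli", "generate",
        "-g", "python",
        "--library", "urllib3",
        "-t", "lib_template",
        "-o", "src",
        "-i", "openapi.yaml",
        "-c", "config.json",
    ])
```

Passing a string to sys.exit prints it to stderr and exits with status 1, so a CI step that calls the script fails visibly instead of reporting success.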

The second function, build_distro_package(), builds the distribution package for the Notehub Py project that will be deployed to PyPI.

First, the function moves into the src folder where all the Notehub Py API code lives, checks whether a dist folder already exists there, and deletes it if it does, so that no previous build artifacts end up alongside the new distribution package.

```python
os.chdir("src/")
# Check if the 'dist/' folder exists
if os.path.exists("dist"):
    # If it exists, delete it and its contents
    shutil.rmtree("dist")
```

Next, the function upgrades the build module to ensure the latest version is installed. This module is essential for generating the distribution packages.

```python
subprocess.run([
    "python3",
    "-m",
    "pip",
    "install",
    "--upgrade",
    "build"
])
```

Finally, the function generates a new dist directory by invoking the build module to create the distribution package, which includes both a source distribution and a wheel.

```python
subprocess.run([
    "python3",
    "-m",
    "build"
])
```

Both of these functions can be invoked from the command line by running python3 scripts.py generate_package or python3 scripts.py build_distro_package.
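Before cutting a release, it can be worth confirming that dist/ actually contains what you expect. The sketch below is a hypothetical check_dist helper (not part of the original scripts.py); it assumes the standard sdist ({name}-{version}.tar.gz) and wheel ({name}-{version}-{tags}.whl) filename patterns and reads the expected version from config.json.

```python
from __future__ import annotations

import json
from pathlib import Path


def check_dist(src_dir: str = "src", config_path: str = "config.json") -> list[str]:
    """Hypothetical pre-release check on the output of build_distro_package()."""
    config = json.loads(Path(config_path).read_text(encoding="utf-8"))
    version = config["packageVersion"]
    dist = Path(src_dir) / "dist"
    if not dist.is_dir():
        raise FileNotFoundError(f"{dist} not found; run build_distro_package first")

    sdists = sorted(p.name for p in dist.glob("*.tar.gz"))
    wheels = sorted(p.name for p in dist.glob("*.whl"))
    if len(sdists) != 1 or len(wheels) != 1:
        raise ValueError(f"expected one sdist and one wheel, found {sdists + wheels}")

    # sdist: {name}-{version}.tar.gz, so the version is the last
    # hyphen-separated field once the extension is stripped.
    sdist_version = sdists[0][: -len(".tar.gz")].rsplit("-", 1)[1]
    # wheel: {name}-{version}-{tags}.whl, where the name part uses underscores
    # instead of hyphens, so the version is the second field.
    wheel_version = wheels[0].split("-")[1]
    for found in (sdist_version, wheel_version):
        if found != version:
            raise ValueError(f"built version {found} != packageVersion {version}")
    return sdists + wheels
```

Because build_distro_package() calls os.chdir("src/"), this check is best run as a separate command from the repository root rather than in the same Python process right after building; otherwise the relative default paths would point at src/src/dist.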

Set up a PyPI account and library to publish to

Now that the Notehub Py config file and scripts to automate the generation and building of the SDK are done, it's time to prepare PyPI to receive the library.

Follow these steps:

- Create a PyPI user account if you haven’t done so already.

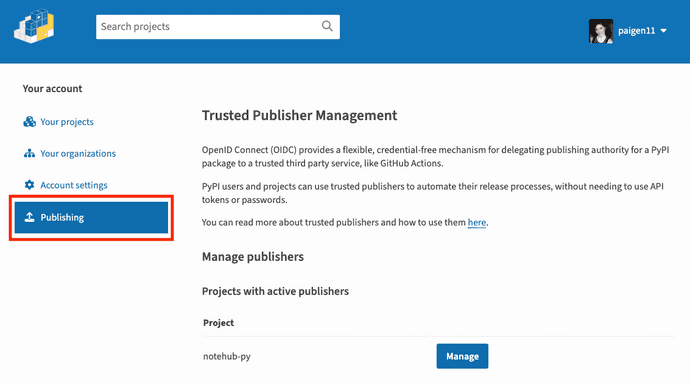

- After logging into PyPI, go to the “Account Settings” page and select the “Publishing” tab.

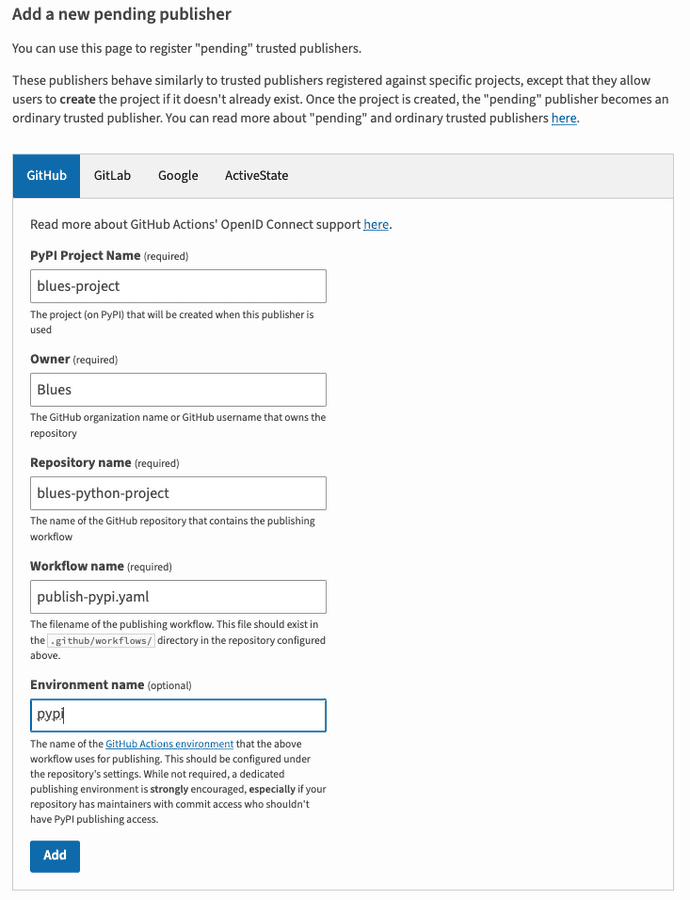

- Scroll down to the “Add a new pending publisher” section of the page and enter the details of the project you want to publish to PyPI: the publisher platform (GitHub), the project name to display on PyPI, the repository owner, the repository name, the publishing workflow's filename (something like publish-pypi.yml), and, optionally, an environment name (pypi for this project). Then click the "Add" button.

Using PyPI's trusted publishing option relies on OpenID Connect (OIDC) to let trusted third-party services like GitHub Actions publish without stored credentials. This allows us to automate the release process without needing API tokens or passwords.
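PyPI checks the workflow filename entered for the publisher against the workflow that requests the publishing token, so the name has to match the file in .github/workflows/ exactly, extension included: publish-pypi.yaml and publish-pypi.yml are different files. A quick local check might look like this; workflow_file_exists is a hypothetical helper, not something PyPI or GitHub provides.

```python
from pathlib import Path


def workflow_file_exists(workflow_name: str, repo_root: str = ".") -> bool:
    """Hypothetical check: is there a workflow file with exactly this name?

    The name should be the one entered on PyPI, extension included.
    """
    return (Path(repo_root) / ".github" / "workflows" / workflow_name).is_file()
```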

Now, we're ready for the final step in the process: a GitHub Actions workflow to automate publishing the newly generated distribution packages to PyPI.

Write a GitHub Actions workflow to publish to PyPI

Need a refresher on GitHub Actions?

If you want a quick primer on what GitHub Actions are, I recommend you check out a previous article I wrote about them here.

For me, it made the most sense to publish the Notehub Py src/ subfolder to PyPI from a GitHub Actions workflow triggered by creating a new release in GitHub.

GitHub specifically defines a release as a deployable software iteration that you can package and make available for a wider audience to download and use, which is exactly what I want.

Once I'd chosen this as the trigger for my workflow, it was a pretty straightforward set of steps to publish to PyPI.

Here's what the finished publish-pypi.yml file inside the .github/workflows/ folder looks like; I'll break it all down below.

```yaml
name: Upload Python Package

on:
  release:
    types: [created]

jobs:
  deploy:
    runs-on: ubuntu-latest
    environment:
      name: pypi
      url: https://pypi.org/p/notehub-py
    permissions:
      id-token: write # IMPORTANT: this permission is mandatory for trusted publishing
    defaults:
      run:
        working-directory: ./src
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Set up Python
        uses: actions/setup-python@v5
        with:
          python-version: "3.x"

      - name: Install dependencies
        run: |
          python3 -m pip install --upgrade pip
          pip install build

      - name: Build package
        run: |
          python3 -m pip install --upgrade build
          python3 -m build

      - name: Publish package to PyPI
        uses: pypa/gh-action-pypi-publish@release/v1
        with:
          packages-dir: ./src/dist/
```

Each GitHub Actions workflow can be given a name, so I chose Upload Python Package, which tells users exactly what the workflow is for.

As I said earlier, this workflow is triggered whenever a new release is created in GitHub, which is where the following lines take effect.

```yaml
on:
  release:
    types: [created]
```

- on defines what triggers the workflow.
- release is the event that triggers the workflow.
- types: [created] is the activity type of the release event that triggers the workflow, which gives us more fine-grained control over when the workflow runs.
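For an extra safeguard against publishing a release whose tag doesn't match the version in config.json, a check could run inside the workflow itself. GitHub Actions stores the triggering event's webhook payload in a JSON file whose path is in the GITHUB_EVENT_PATH environment variable, and a release event's payload includes release.tag_name. The sketch below is hypothetical and not part of Notehub Py; the function name and the optional "v" tag prefix are my assumptions.

```python
from __future__ import annotations

import json
import os
from pathlib import Path


def release_matches_config(event_path: str | None = None,
                           config_path: str = "config.json") -> bool:
    """Hypothetical guard: does the release tag match packageVersion?

    On a GitHub Actions runner, GITHUB_EVENT_PATH points at a JSON file
    holding the webhook payload of the event that triggered the run.
    """
    event_path = event_path or os.environ["GITHUB_EVENT_PATH"]
    event = json.loads(Path(event_path).read_text(encoding="utf-8"))
    tag = event["release"]["tag_name"]
    config = json.loads(Path(config_path).read_text(encoding="utf-8"))
    # Accept both "1.0.2" and "v1.0.2" style tags (an assumed convention).
    return tag.removeprefix("v") == config["packageVersion"]
```

Run as an extra step before the build, a script that exits non-zero when this returns False would stop the job before anything reaches PyPI. Because of the defaults.run setting in this workflow, such a step would run inside ./src, so config.json would be at ../config.json.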

Next comes the jobs section. This workflow only has one job, deploy, but if there were multiple jobs, they would run in parallel by default unless a job is told to wait for another one with needs.

The deploy job runs on the latest GitHub-hosted Ubuntu runner image, as specified by runs-on: ubuntu-latest.

The job is configured to run within an environment named pypi, with a URL that points to the PyPI project page for notehub-py, and the permissions section grants the job the id-token: write permission, which is required for trusted publishing to PyPI. These settings correspond to the details entered on PyPI in the previous section when setting up trusted publishing via OpenID Connect.

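```yaml
environment:
  name: pypi
  url: https://pypi.org/p/notehub-py
permissions:
  id-token: write # this permission is mandatory for trusted publishing
```

The defaults.run section sets the working directory to ./src for all run steps, ensuring the commands are executed within the source subdirectory of the project. Because defaults.run only applies to run steps, the final publish step, which uses an action instead, still spells out the full ./src/dist/ path.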

Finally, we get to the steps, which are as follows:

- Check out the repository's code onto the runner with actions/checkout@v4.
- Set up a Python 3.x environment using actions/setup-python@v5.
- Upgrade pip and install the build module needed to create the distribution package.
- Build the distribution package using python3 -m build.
- Publish the built packages to PyPI using the pypa/gh-action-pypi-publish@release/v1 action. The packages-dir parameter specifies the directory containing the distribution files (./src/dist/).

And there you have it: each time a new release is created in the Notehub Py repo, this GitHub Actions workflow will run and deploy the updated code to PyPI.

Conclusion

After publishing my first JavaScript SDK of my company's API on npm last year, I was asked to build a similar SDK in Python and distribute it to PyPI.

I was able to repurpose a lot of the same steps and workflows I used for generating the JavaScript library to generate the Python library, but configuring the deployment to PyPI was a bit different as that package platform recommends using trusted publishing to deploy new package versions.

With just a few Python scripts, a config file, and a little bit of setup on the PyPI site, I was able to deploy the auto-generated subfolder inside of the Notehub Py project to PyPI, helping developers more easily interact with the Notehub API. GitHub Actions allowed me to build the code, package it up for distribution, and publish it to PyPI in no time.

Check back in a few weeks — I’ll be writing more about the useful things I learned while building this project in addition to other topics on JavaScript, React, IoT, or something else related to web development.

Thanks for reading. I hope learning how to deploy a subfolder of a project to PyPI through GitHub Actions workflows comes in handy for you in the future. Enjoy!

References & Further Resources

- Notehub Py GitHub repo

- notehub-py SDK on PyPI

- OpenAPI Generator CLI
